Artificial intelligence (AI) is all anyone is talking about at the moment. Although the technology is not perfect (and may never be), and it is sometimes very amusing to point out “how wrong these computers can get things”, this is probably the wrong way to look at these innovations. As the saying goes, “the perfect is the enemy of the good”, and as these smart technologies become increasingly integrated into our everyday lives, their outputs will only become more impressive.

The thing is, these technologies do not need to be perfect. They just need to be good enough that the time they save outweighs the time it takes someone to sanity-check their outputs, or (perhaps more worrying to some)… they just need to be better than the average human. With this in mind, some are raising concerns about the growing use of AI across multiple platforms, on issues ranging from job security to data protection.

“I don’t have to be faster than the bear. I only have to be faster than you.”

Currently, the main way the public is exposed to AI is through applications like ChatGPT, an impressive chatbot built on a large language model and capable of generating human-like text. Although some may see it as a parlour trick, it is hard to deny just how convincing some of its responses can be. In fact, over the past year or two, at the height of the hype surrounding AI, academics were even adding ChatGPT to the author lists and acknowledgements sections of their peer-reviewed scientific publications. Whether this was done for transparency, for novelty, or just for their own amusement is hard to determine, but it does raise questions about the future of how we communicate science.

Working smarter, not harder?

At the moment, the science community is in a rather precarious position. In a world where we have almost all of human knowledge at our fingertips via the Internet, a worrying realisation is that not all information is created equal. Although the ideas of fake news and disinformation have been well and truly cemented in many of our minds since the COVID-19 pandemic, it is now possible to generate content on almost any topic that reads and sounds credible but lacks any due diligence: it can feature fake references, misrepresented quotes and false information that even the most well-trained fact-checker would find difficult to spot. All of it created at the click of a button!

With content now so easy to create, are we setting back the Internet and knowledge sharing?

However scathing I may be about these chatbots, as a science communicator who is sometimes faced with creative blocks or tight deadlines, I cannot say that I have never played around with ChatGPT. I have used it as a writing-prompt tool, as a way to workshop ideas, and as a jumping-off point for an entire article. As I type this, I worry that this admission could, in the eyes of many, call into question the validity of this entire piece and of everything I have ever written.

Still, as with all tools, I believe that AI can be wielded responsibly, and as more people start using programs like ChatGPT as part of their regular toolkits, the time and effort saved will, in the long term, start to outweigh any doubts the community currently has.

In a 2023 survey, Nature asked more than 1,600 researchers from around the world about their thoughts on AI technology and how they have used these tools in their own work. More than a quarter said they use AI to help them write manuscripts, and more than 15% use it to help prepare grant proposals. This is a double-edged sword: these practices can free up a lot of time and reduce the workload of many busy researchers without affecting the quality of their outputs, but they also call into question the validity of the current systems. If many of the documents required for a successful grant proposal can be written by AI, is it time we rethought how these processes are conducted?

The examples above are just a few of the ways that AI technology has been, and will continue to be, used in science and innovation. Machine-learning algorithms have been used in science for the past decade: to build bespoke programs that analyse massive amounts of data that would take a single person a lifetime to process, to save time by automating routine tasks, and even to make new discoveries. AI has already revolutionised science and research, but now it is in the spotlight.

Smart Technology?

At present, what much AI software can do depends on the data it has access to and on how its programmers designed the original code. Although many think that the outputs produced by an AI or similar system are impervious to bias and outside influence, this is by no means the case. A phrase commonly used for such cases is “garbage in, garbage out”. If the creator of a program trains its algorithms on incomplete data sets, or the underlying code is written without input from multiple viewpoints, bias can become ingrained in these systems, and because of the way AI systems are developed, or “evolve”, reverse-engineering these problems out of the system can be impossible. Additionally, most current AI systems are trained to do a single, specific task very well, and even slight deviations from this one job may prove too much for them to cope with. However, as the technology improves, these shortcomings will become a smaller and smaller problem.

An Example of AI and Citizen Science

Another widely used application of AI technology is image generation. Programs such as DALL-E and Midjourney allow anyone to create a work of art in multiple styles by simply writing a short description, or by adding elements to an existing image. Although there have been controversies over where these programs obtained the data used to create their images, and over art created with such tools emulating the work of professional artists, they certainly offer a low barrier to entry for lay people to create images and visualise concepts.

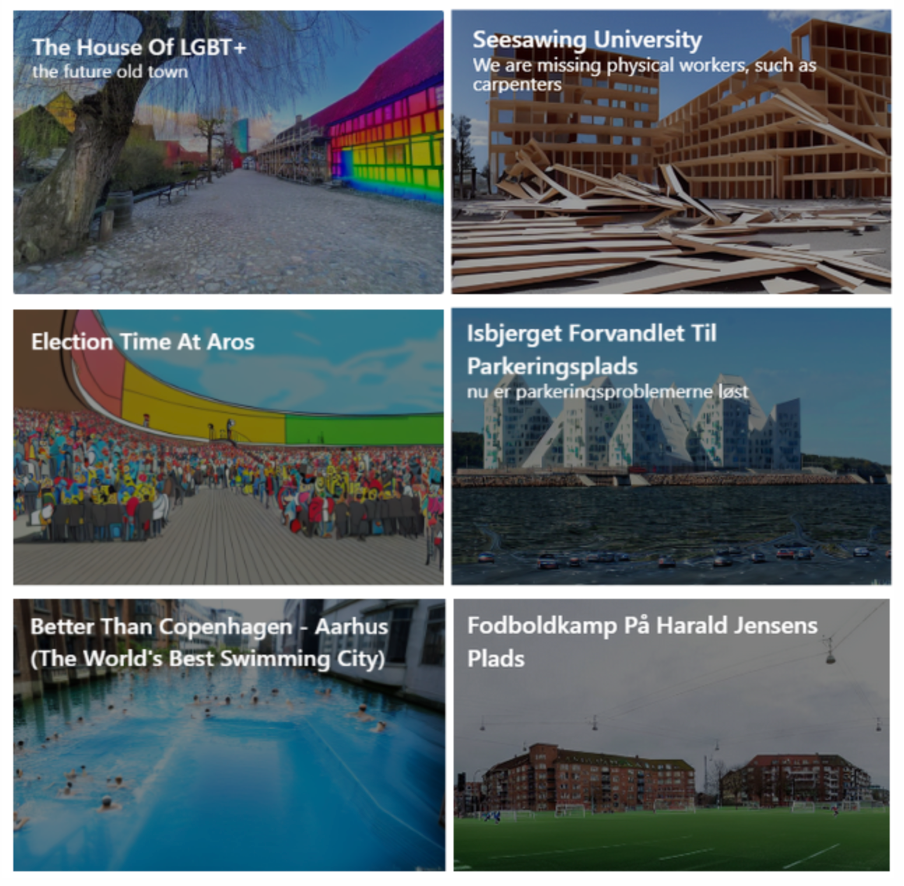

The Crea.Visions Initiative, led by the Center for Hybrid Intelligence at Aarhus University, has created bespoke AI software that uses a catalogue of photographs of cities to produce new images of urban environments. This allows members of the public to create dystopian or utopian visions of the cities they live in, both as a means of visualising the potential effects of wicked problems such as climate change and as a way to imagine a better world for everyone!

This project works with the United Nations' AI for Good, ArtBreeder, and Klimafolkemødet to raise awareness of the challenge of climate change and the importance of reaching the UN Sustainable Development Goals (SDGs).

These images were used as jumping-off points to drive conversations with policymakers, researchers, and members of society about how we may work together to tackle the issues we face in the world today, and to tell stories about how science shapes our lives.

Over the past three years, Crea.Visions has conducted events in Venice, Paris, Belgrade, and at the Danish Climate Summit, where visitors could play interactive games that resulted in the creation of powerful pieces of art.

These images were then showcased at exhibitions and events, in the hope of driving more conversations about the actions we can take to avoid the dystopian and work our way towards the utopian.

Embrace Change

Now that the door has been opened, it cannot be closed. AI technology is here, and it is only going to become more prevalent, so it is important that we accept this fact quickly. Although it is almost impossible to see where these innovations will lead, we must do our best to stay ahead of the curve, both to make full use of the tools we have been given and to make sure that safeguards are in place so that they are used ethically. This will involve creating new policies and recommendations for research institutes and science communicators to ensure that these tools are used responsibly. This step change will most likely lead to new roles and expertise in these fields, which will act as a guiding light for how we should approach these new tools.

It is hard to know exactly how AI will change the research and innovation landscape. It may be that these innovations plateau, with only minor improvements being made to the systems we see today. However, it is probably more likely that, sooner or later, we will see further big leaps in these technologies, and that AI will continue to play an increasingly critical role in research, as well as changing how information is shared. So, embrace new innovations, fling open the doors, and step through… carefully!

Written by Chris Styles, EUSEA Project Officer for IMPETUS

Image credits:

Feature Image by

ChatGPT image by Mojahid Mottakin from Pexels

Fake News Image: Red Fake news button on white keyboard by Marco Verch under Creative Commons 2.0